In the United States, building energy use is a serious problem: buildings account for 70 percent of total electricity consumption, with heating and cooling responsible for the largest share of that energy. Much of this is attributed to widespread inefficiencies in building design and operation, but truly understanding building energy use, let alone addressing it, is a daunting task when the U.S. alone has more than 100 million structures. A recent article by Rachel Harken of Oak Ridge National Laboratory (ORNL) highlighted how supercomputing can help tackle that challenge.

As Harken explains, because collecting and organizing the necessary data is so onerous, building energy modeling is rarely used in practice for new construction or retrofit projects. Now, a team at ORNL aims to create a cost-effective building modeling approach that could be applied to every building in the country.

The team’s approach automatically extracts “high-level” building descriptors, such as floor area and orientation, from public data. A series of simulations then combines those known parameters with different candidate values for the remaining, unknown parameters. By comparing the simulation outputs with a building’s measured energy use, the model identifies the set of parameters that best reproduces that use. Using those calibrated results, the models can suggest suitable energy efficiency measures and lend insight into where distributed energy (such as local solar) might be a good strategy.
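The calibration idea (simulate many combinations of unknown parameters, then keep the combination whose output best matches metered data) can be illustrated with a minimal brute-force sketch. Everything here is hypothetical: `simulate_annual_kwh` is a toy formula with made-up coefficients standing in for a real physics-based building simulator, the parameter names and grid values are invented, and the comparison uses a single annual total, whereas a real calibration would likely compare finer-grained (for example monthly or hourly) data. This is not ORNL's actual method or code.

```python
import itertools

# Hypothetical toy simulator: NOT a real building energy engine.
# Coefficients are invented purely to make the search illustrative.
def simulate_annual_kwh(floor_area_m2, wall_r_value, infiltration_ach, setpoint_c):
    envelope_loss = floor_area_m2 * (1.0 / wall_r_value) * 40.0  # better insulation -> less loss
    air_loss = floor_area_m2 * infiltration_ach * 15.0           # leakier building -> more loss
    setpoint_factor = 1.0 + 0.05 * (setpoint_c - 20.0)           # warmer setpoint -> more energy
    return (envelope_loss + air_loss) * setpoint_factor


def calibrate(known, unknown_grid, measured_kwh):
    """Try every combination of candidate values for the unknown parameters,
    combined with the known (publicly derived) ones, and return the combination
    whose simulated annual use is closest to the measured value."""
    names = list(unknown_grid)
    best_params, best_error = None, float("inf")
    for values in itertools.product(*(unknown_grid[n] for n in names)):
        candidate = dict(zip(names, values))
        simulated = simulate_annual_kwh(**known, **candidate)
        error = abs(simulated - measured_kwh)
        if error < best_error:
            best_params, best_error = candidate, error
    return best_params, best_error


known = {"floor_area_m2": 200.0}  # e.g. extracted from public records
unknown_grid = {
    "wall_r_value": [1.0, 2.0, 3.0],
    "infiltration_ach": [0.5, 1.0],
    "setpoint_c": [20.0, 22.0],
}
best, err = calibrate(known, unknown_grid, measured_kwh=5500.0)
print(best, err)
```

Exhaustive search keeps the sketch easy to follow, but its cost grows multiplicatively with every unknown parameter added, which hints at why calibrating models with thousands of inputs calls for large-scale computing or smarter search strategies.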

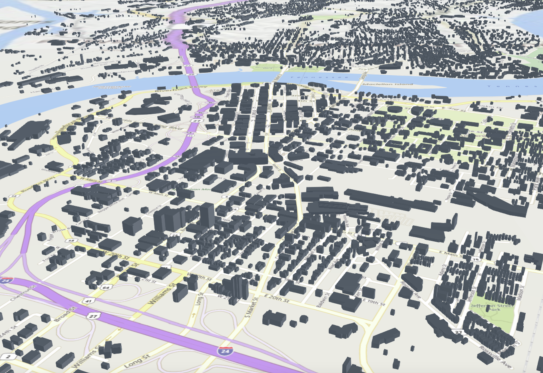

To test their approach, the team focused on just 0.1 percent of the country’s buildings: the nearly 180,000 buildings served by the Electric Power Board (EPB) in Chattanooga, Tennessee. “EPB wanted to see how much money they could save their customers by taking steps to lower energy demand during peak critical hours,” said Joshua New, the team’s leader and an R&D senior staff member at ORNL.

“EPB has 178,368 buildings, and each building model requires around 3,000 inputs,” New said. “If we wanted to include a 15-minute lighting schedule, we would have 35,040 numbers just to tell us if the lights are on or off—and that’s only one input.”
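The figures in New's quote can be checked with simple arithmetic: a year has 365 × 24 × 4 = 35,040 fifteen-minute intervals, and roughly 3,000 inputs for each of 178,368 buildings comes to about 535 million inputs before any per-interval schedules are expanded. The helper name below is illustrative only, and the year length is an assumption (a leap year would give 35,136 intervals).

```python
BUILDINGS = 178_368
INPUTS_PER_BUILDING = 3_000  # approximate figure quoted by New


def schedule_values(interval_minutes, days=365):
    """Number of values needed for one schedule at the given time resolution."""
    return days * 24 * 60 // interval_minutes


print(schedule_values(15))                 # 35040
print(BUILDINGS * INPUTS_PER_BUILDING)     # 535104000
```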

To handle that volume of inputs, the team turned to ORNL’s own Titan supercomputer at the Oak Ridge Leadership Computing Facility (OLCF). Titan, which was decommissioned in August, paired 18,688 AMD Opteron CPUs with 18,688 Nvidia K20X GPUs to deliver nearly 18 Linpack petaflops. The team ran nine different scenarios on Titan for 6.5 hours each, both to model existing energy use accurately and to examine which retrofit approaches had the greatest effect on energy use across the city.
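Comparing retrofit scenarios across a city ultimately reduces to totaling each measure's per-building savings against a baseline and ranking the results. The sketch below shows that bookkeeping step only; the building IDs, measure names, and kWh values are invented for illustration and are not EPB or ORNL results, and `rank_measures` is a hypothetical helper.

```python
def rank_measures(baseline_kwh, retrofit_kwh):
    """baseline_kwh: {building_id: simulated kWh with no retrofit}
    retrofit_kwh: {measure: {building_id: simulated kWh with that measure}}
    Returns (measure, total savings in kWh) pairs, largest savings first."""
    totals = {}
    for measure, per_building in retrofit_kwh.items():
        totals[measure] = sum(baseline_kwh[b] - kwh for b, kwh in per_building.items())
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Invented example data for three buildings.
baseline = {"b1": 12000.0, "b2": 30000.0, "b3": 8000.0}
retrofits = {
    "led_lighting":     {"b1": 11000.0, "b2": 27500.0, "b3": 7600.0},
    "attic_insulation": {"b1": 10500.0, "b2": 28000.0, "b3": 7000.0},
    "smart_thermostat": {"b1": 11400.0, "b2": 28800.0, "b3": 7700.0},
}
for measure, savings in rank_measures(baseline, retrofits):
    print(measure, savings)
```

At city scale the expensive part is producing the simulated values for every building and scenario; the aggregation itself stays this simple.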

The results, thankfully, validated the team’s approach. Now, EPB is planning to install smart thermostats in around 200 buildings to help validate the simulations’ predictions. The team also plans to make the models openly available, hoping that they will help reduce energy use nationwide.